From its humble beginnings in 2012, Amazon re:Invent has come a long way. This year, the Las Vegas event attracted more than 60,000 participants from around the world. Along the way, Amazon Web Services (AWS) has become the public cloud service provider that leads in both revenue market share and customer mind share. Amazon highlighted the use of its technology in sports and several other industries. Because each industry faces different challenges, framing the value of Amazon’s technology by industry helps customers see its applicability and return on investment for their own organizations. Amazon showcased partners at several sessions, recognizing each partner’s importance to customer success, and partners in the expo hall demonstrated how they contribute to that success using AWS. During the analyst briefing at the event, AWS outlined several investment areas for innovation. This write-up describes those investment areas, takes a deeper look at Amazon’s AI strategy, and closes with a summary.

The four investment areas AWS outlined during the briefing are described below:

1. Infrastructure Innovation: AWS Graviton is an Arm-based processor family designed by AWS for a broad range of cloud workloads. In addition to general-purpose instances, Graviton-based instances are available in compute-, memory-, graphics-, and storage-optimized versions. Amazon first announced Graviton in 2018 and has since followed it with Graviton2 in 2019, Graviton3 in 2021, and Graviton3E in 2022. Graviton4, announced at this year’s event, is 30% to 45% faster than Graviton3 for databases, web applications, and Java applications. SAP shared how Graviton powers its HANA (High-performance Analytic Appliance) database, which is popular among its customers. One observation is that Java applications on Graviton gain the most performance when they run on a recent Java version. To this end, Amazon announced Amazon Q Code Transformation (in preview), an AI-based tool that helps upgrade Java 8 and Java 11 applications to Java 17.

2. Building and Managing Applications on AWS: For customers running many applications on AWS, getting a single view of them becomes cumbersome. To address this, Amazon CloudWatch now consolidates hybrid, multicloud, and on-premises metrics. The new myApplications experience lets customers monitor and manage their applications from the AWS Management Console.

Last year, AWS announced Amazon CodeCatalyst, a unified software development service that helps development teams quickly build and deliver applications on AWS. At re:Invent 2023, Amazon added a natural language interface to CodeCatalyst to help developers automate repetitive tasks.

Together, these capabilities improve operational efficiency and developer productivity.

3. Data and Analytics: Amazon S3 Express One Zone was one of the most notable announcements. Because it stores data in a single Availability Zone, this storage class is less resilient to the loss of an Availability Zone than S3 Standard, which stores data across multiple Availability Zones. In return, it delivers up to 10x better performance than S3 Standard, which should shorten run times for data-intensive AI applications.

4. The Amazon AI Stack: Amazon divides its AI stack into three categories. The first is infrastructure for model training and inference, designed to accelerate customer AI workloads. The second, AI for every product and process, is an AI platform that assists customers in their AI journey. The third is applications that use AI, aimed at developers, line-of-business users, and AI specialists. Each category is described below.

a. Infrastructure for model training and inference: Generative AI requires training and inference workloads to run quickly and at lower cost. While NVIDIA GPUs are ideal for speed, the newer generations of AWS Trainium and Inferentia chips offer a lower-cost option. This gives customers several alternatives when choosing the right infrastructure for each AI workload. Hardware alone is not enough, however; customers also need software to put it to use. AWS Neuron is an SDK that lets popular frameworks such as TensorFlow and PyTorch train and deploy models on these chips.

Amazon SageMaker, a fully managed service that lets customers build, train, and deploy models, further simplifies the use of AI. Amazon SageMaker HyperPod automates much of the work of running and optimizing distributed training, splitting workloads so that models train in parallel. Workday and Perplexity AI are among the customers using SageMaker HyperPod for their large language models (LLMs). Amazon also updated SageMaker Studio with a new web-based interface and simpler user onboarding and administration, and Amazon SageMaker Canvas now supports a natural language interface.

b. AI for every product and process: Amazon Bedrock is a fully managed service that supports multiple foundation models (FMs), including models from Cohere, Anthropic, Meta, Amazon, Stability AI, and AI21 Labs. Because Bedrock is serverless, users can integrate generative AI capabilities into their applications without managing infrastructure. Fine-tuning is a critical step in AI adoption, and Bedrock simplifies fine-tuning FMs with private datasets. Inaccurate or out-of-context responses are another hurdle to generative AI adoption; Knowledge Bases for Amazon Bedrock addresses this by grounding responses in company data through retrieval-augmented generation (RAG). Finally, Agents for Amazon Bedrock enables generative AI applications to orchestrate and execute multistep tasks by calling the APIs of systems of record.
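To make the serverless model more concrete, here is a minimal Python sketch of calling an Amazon Titan Text model through the Bedrock runtime `InvokeModel` API. It assumes a boto3 `bedrock-runtime` client, the `amazon.titan-text-express-v1` model ID, and that model access is already enabled in the account. The request and response fields follow the Titan Text format as we understand it and should be checked against current AWS documentation. The helper names `build_titan_request` and `generate_text` are hypothetical.

```python
import json
from typing import Any

# Assumed model ID for Amazon Titan Text Express; substitute any text model
# your account has access to (its request/response format may differ).
TITAN_TEXT_MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_request(prompt: str, max_tokens: int = 256, temperature: float = 0.5) -> str:
    """Serialize a prompt into the JSON body that Titan Text models accept (assumed format)."""
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
            "topP": 0.9,
        },
    })


def generate_text(client: Any, prompt: str, model_id: str = TITAN_TEXT_MODEL_ID) -> str:
    """Invoke a model through a bedrock-runtime client and return the generated text.

    `client` is expected to be boto3.client("bedrock-runtime"). It is passed in
    as a parameter so the logic can be exercised without AWS credentials.
    """
    response = client.invoke_model(
        modelId=model_id,
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it and decode the JSON payload.
    payload = json.loads(response["body"].read())
    # Titan Text returns a list of results; take the first completion.
    return payload["results"][0]["outputText"]
```

In practice, a call such as `generate_text(boto3.client("bedrock-runtime"), "Summarize these meeting notes.")` returns the model's completion. There is no cluster or endpoint to provision, which is what "serverless" means here. Passing the client in, rather than creating it inside the function, keeps the sketch testable.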

A significant concern with generative AI adoption is that AI deployments may produce harmful or unfair outcomes for customers. Organizations want to build and implement customized safeguards that match their organizational AI policies. Guardrails for Amazon Bedrock (in preview) provides this additional control by letting users define denied topics and content filters.
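As an illustration of what denied topics and content filters look like in practice, the sketch below assembles a guardrail definition in Python. The field names (`topicPolicyConfig`, `contentPolicyConfig`, `blockedInputMessaging`, `blockedOutputsMessaging`) reflect our understanding of the boto3 `create_guardrail` request on the `bedrock` control-plane client after general availability. The preview described here may have used a different shape, so treat the structure as an assumption to verify. The function name, example topic, and blocked-request messages are hypothetical.

```python
from typing import Any


def build_guardrail_request(name: str, denied_topics: dict[str, str]) -> dict[str, Any]:
    """Assemble keyword arguments for a CreateGuardrail call (assumed request shape).

    `denied_topics` maps a short topic name to a natural-language definition
    of what the guardrail should refuse to discuss.
    """
    topics = [
        {"name": topic, "definition": definition, "type": "DENY"}
        for topic, definition in denied_topics.items()
    ]
    # Content filters: block hateful and violent content in both prompts and responses.
    filters = [
        {"type": filter_type, "inputStrength": "HIGH", "outputStrength": "HIGH"}
        for filter_type in ("HATE", "VIOLENCE")
    ]
    return {
        "name": name,
        "topicPolicyConfig": {"topicsConfig": topics},
        "contentPolicyConfig": {"filtersConfig": filters},
        # Messages returned to the user when a prompt or a response is blocked.
        "blockedInputMessaging": "Sorry, I can't help with that request.",
        "blockedOutputsMessaging": "Sorry, I can't provide that response.",
    }
```

The resulting dictionary would be passed as `boto3.client("bedrock").create_guardrail(**request)`. Each denied topic carries a natural-language definition rather than a keyword list, which lets an organization express its AI policy in plain terms.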

Amazon expanded its Titan family of FMs by adding Amazon Titan Image Generator, Titan Multimodal Embeddings, and Titan Text models to Amazon Bedrock. Titan Image Generator adds an invisible watermark to the images it creates, which makes AI-generated images identifiable and helps reduce the spread of misinformation.

c. Applications that use AI: Generative AI can be embedded in use cases ranging from accelerating and optimizing back-office processes to making customer-facing front-office interactions more efficient. Enterprise transformation leaders are also looking to launch new, innovative business units built on generative AI. The need for AI spans processes such as automating software development and testing, generating business insights, and approving customer contracts.

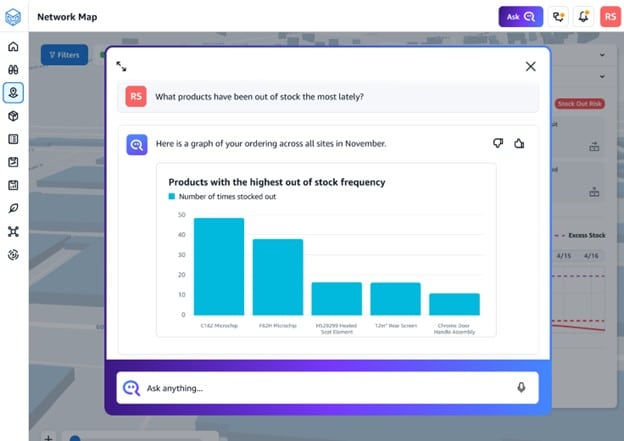

Amazon announced Amazon Q, a generative AI assistant designed to help users across a range of domains. At the conference, Amazon introduced Amazon Q capabilities for three personas: developers, business analysts, and contact center agents. It also announced Amazon Q in AWS Supply Chain, which lets users ask questions about their supply chain and get answers (see Figure 1 above).

For developers and IT professionals, the two standout capabilities are Amazon Q in CodeCatalyst and Amazon Q Code Transformation. For business analysts, Amazon Q in QuickSight automates the process of gaining insights from data. For contact center agents, Amazon Q in Connect (formerly Amazon Connect Wisdom) helps improve customer satisfaction by delivering accurate information, and agents can use natural language to search across multiple data sources.

AWS also made available PartyRock, a free tool that helps users experiment with building AI apps on Amazon Bedrock; an earlier blog post covers it in detail.

Summary:

Amazon has a strong AI strategy for its customers. Implementations of AWS technology in sports arenas and casinos demonstrate Amazon’s ability to address unique industry challenges. The breadth of its services, ranging from application-level migration assistance to silicon-level efficiencies, gives Amazon customers benefits not commonly available from other cloud service providers. Amazon S3 Express One Zone, combined with the improved Trainium and Inferentia chips, offers storage and compute capabilities at compelling costs, allowing customers to build new business models using generative AI. The announcement of Amazon Q brings the value of generative AI to a range of Amazon users, from developers to business analysts and contact center agents.

As the market-share leader in enterprise cloud spending, AWS is already where many customers keep their data. Running AI workloads alongside that data makes it much more efficient for customers to use Amazon services for their AI initiatives. As a result, Amazon customers will likely take their first steps into AI adoption with Amazon technologies. Amazon also has a reputation for delivering services and quickly adapting them to customer needs based on feedback, which positions it to capitalize on this fast-moving technology trend.

The technology industry is seeing a lot of activity, with many large and small players entering the AI market. As the incumbent leading public cloud service provider, AWS is in an advantageous position in the AI race, and its customers can efficiently connect their data to Amazon’s AI services. AWS should act quickly to help its large existing client base adopt AWS AI services by providing tools and techniques that infuse AI, including generative AI, capabilities into existing applications. AWS clients could then embark on their AI transformation journeys without looking elsewhere.

Ram Viswanathan, Consultant and ex-IBM Fellow, contributed to this blog post.