Background

Amazon Web Services (AWS) held an analyst summit before the one-day NYC event, focusing primarily on AWS’s AI strategy. While many technology vendors hold one big conference, Amazon supplements its annual re:Invent conference with several regional and theme-focused events, such as the security-oriented re:Inforce. The one-day conference was attended primarily by financial services and healthcare customers. At the keynote, Swami Sivasubramanian, VP of Database, Analytics, and ML, explained the AWS data strategy at a high level, including a graphic listing all the AWS services that contribute to the data foundation (see Figure 1 below) required for developing Generative AI applications.

This blog post provides an overview of AWS AI strategy and a deeper view of AWS HealthScribe, built using AWS data foundation services for its healthcare customers.

Amazon’s AI Strategy

Recognizing the challenges of adopting nascent AI technology, Amazon participates in several responsible AI forums, including the White House initiative, to help ensure safe, secure, and trustworthy AI. The following five fundamental principles drive Amazon’s AI offerings.

1. Enable Generative AI: Large Foundation Models (FMs) pre-trained on large amounts of data power Generative AI to create content and ideas. This field is still in its formative stages but promises transformative business capabilities. Amazon Bedrock makes it easy to build Generative AI applications with a choice of FMs. These include models from AI21 Labs, Anthropic, Cohere, and Stability AI, along with Amazon’s own Titan models. The differentiator of Amazon’s offerings is the ease of use and the ability to customize models privately with an organization’s data.

2. Accelerate AI with Silicon: RobustCloud covered silicon innovation in an earlier blog post. To enhance efficiencies at the silicon level for AI, Amazon announced the general availability of EC2 Trn1n instances powered by AWS Trainium and EC2 Inf2 instances powered by AWS Inferentia2, optimized for ML training and inference. These instances help reduce the cost of training and running models in the cloud. The announcement marks AWS’s continued investment in its silicon, which aims to offer the most cost-effective and performant infrastructure for ML applications.

3. Increase Developer Productivity: One of the areas where enterprises most want short-term productivity gains from Generative AI is application development, accelerated through developer efficiency. At the analyst briefing, Accenture shared that developers gained around 30% efficiency using coding productivity tools. To meet this need, Amazon has released CodeWhisperer, an AI-powered coding assistant that generates real-time code suggestions.

4. Standardize Machine Learning infrastructure: Amazon released SageMaker to make AI easy to use, an aim dubbed the “democratization of AI.” As a fully managed service, SageMaker enables developers and data scientists to build, train, and deploy machine learning models at scale. Its integrated environment, covering data preparation, analysis, and model deployment, simplifies the end-to-end machine learning process. SageMaker Canvas, combined with Amazon SageMaker Studio, is a no-code collaborative platform allowing teams to work together on ML models.

5. Build AI-powered Apps: AI vendors can gain customers by building AI-powered solutions that directly address specific problems. Amazon’s Contact Center Intelligence, Intelligent Document Processing, and Amazon Personalize solutions all accelerate the enterprise journey to digitize business processes by taking advantage of AI. Some of these solutions target industry-specific problems. For example, Amazon Monitron reduces equipment downtime for the industrial sector.

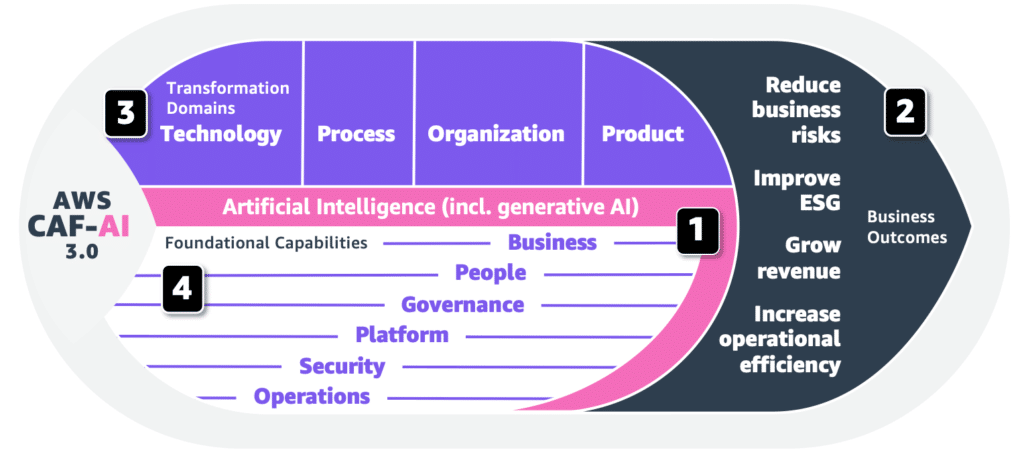

AWS has updated its Cloud Adoption Framework (CAF) for Artificial Intelligence, Machine Learning, and Generative AI (CAF-AI) based on these principles (see Figure 2 below). In this framework, AWS outlines a typical customer’s AI/ML journey as the organization matures. It guides organizations through identifying a target state and reaching it gradually while realizing business value along the way.

With this overarching framework, AWS is enriching its existing services and developing newer AI-powered, domain-specific services. AWS HealthScribe is one such service that aims to simplify and automate the documentation requirements of healthcare providers.

AWS HealthScribe

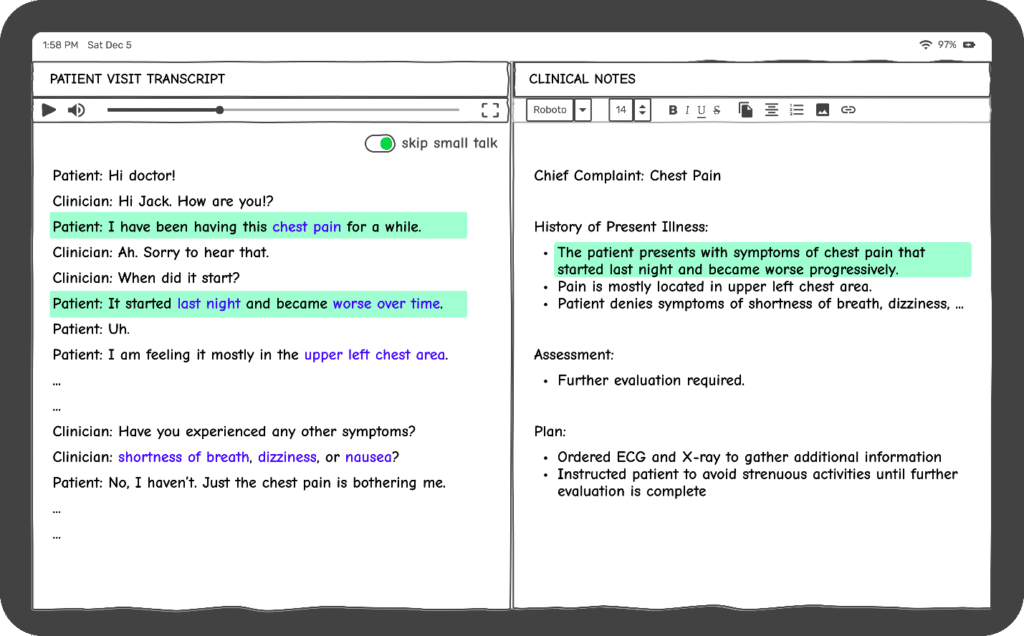

AWS HealthScribe is an AI-powered solution that addresses a big pain point in the healthcare industry. Most physicians need help to complete documentation after patient interactions. AWS HealthScribe is a purpose-built HIPAA-eligible service that assists software vendors in developing clinical applications that can transcribe and summarize patient-clinician conversations (see Figure 3 below). It combines conversational and generative AI capabilities to reduce the burden and cost of clinical documentation and to improve the overall experience.

AWS HealthScribe analyzes patient-clinician conversation audio recordings to provide the following:

● Produce Transcript – Rich word-by-word transcript with timestamps.

● Identify Speaker – Each speaker in the audio recording is uniquely identified and tagged in the transcript.

● Develop Summarized Clinical Notes – Analyze conversation and generate summarized notes under relevant sections like complaint, history, assessment, and plan.

● Map Evidence – Every sentence in the AI-generated summary traces back to the original conversation transcript.

● Derive Structured Medical Terms – Structured medical terms are derived from the transcript and history, which can be used for treatment and further actions.

● Segment Summary – Categorize the transcript and organize it appropriately in summarized sections.
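The evidence-mapping capability in the list above can be pictured with a small data model. The following is a minimal Python sketch under stated assumptions: the class names, field names, and section labels are hypothetical illustrations and are not the HealthScribe output schema. It shows only the idea that each summary sentence carries links back to the transcript segments that support it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class TranscriptSegment:
    """One timestamped, speaker-tagged piece of the conversation (hypothetical shape)."""
    segment_id: str
    speaker: str      # e.g. "CLINICIAN" or "PATIENT"
    start_s: float    # start time in seconds
    end_s: float      # end time in seconds
    text: str


@dataclass(frozen=True)
class SummarySentence:
    """One sentence of the generated note plus the segments it is based on."""
    section: str                        # e.g. "CHIEF_COMPLAINT", "PLAN" (assumed labels)
    text: str
    evidence_ids: tuple[str, ...] = ()  # ids of supporting transcript segments


def trace_evidence(sentence: SummarySentence,
                   segments: list[TranscriptSegment]) -> list[TranscriptSegment]:
    """Return the transcript segments that a summary sentence points back to."""
    by_id = {seg.segment_id: seg for seg in segments}
    return [by_id[i] for i in sentence.evidence_ids if i in by_id]


def unsupported_sentences(summary: list[SummarySentence],
                          segments: list[TranscriptSegment]) -> list[SummarySentence]:
    """List summary sentences with no resolvable evidence, for human review."""
    return [s for s in summary if not trace_evidence(s, segments)]
```

A clinical application built on such a structure could let a reviewer click a sentence in the note and jump to the matching moment in the recording, and could flag any sentence returned by `unsupported_sentences` for closer review.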

With these consolidated capabilities, AWS HealthScribe looks to reduce onboarding costs and time to market for healthcare software vendors, some of whom, like 3M, Babylon, and ScribeEMR, already use this service in their clinical applications.

This service is still in preview and available only in certain regions. However, with the market focusing on Generative AI, services like AWS HealthScribe are bound to gain momentum in the coming months.

Summary

AI has gained much attention since the launch of ChatGPT in November 2022. Enterprises are trying to improve productivity with solutions powered by Generative AI, and IT vendors benefit from the demand growth. NVIDIA is capitalizing on the acceleration of computing demand to power AI solutions. Microsoft is offering OpenAI on Azure, and customers with an existing Microsoft relationship are trying out solutions on Azure. Although Google initially did not release AI solutions to the market due to a lack of confidence in its products, it is now playing catch-up with multiple initiatives like Bard. Oracle has partnered with NVIDIA for infrastructure and Cohere for Large Language Models (LLMs). IBM has various AI initiatives under the watsonx umbrella. Google, Oracle, and Salesforce will likely clarify their AI strategies at upcoming events.

Amazon’s launch of Bedrock with numerous supported models addresses critical pain points that developers and businesses face, making AI more accessible, secure, and easy to use. With SageMaker, the democratization of AI technology could spur the adoption of AI across a wide range of industries and application domains. The new EC2 instances make ML more accessible and cost-effective with purpose-built infrastructure. AWS’s continuous investment in custom silicon for ML workloads could become a differentiator for enterprises of all sizes.

As enterprises prioritize investments to take advantage of Generative AI, developer productivity often rises to the top of the list because the tools are easy to deploy and the value gained is easy to measure. The adoption of Amazon CodeWhisperer by Persistent, a software engineering services specialist with about 20,000 employees, shows that large software development teams can trust the tool without the risk of using restrictively licensed code.

AI technologies are evolving fast and will continue to mature. To provide value, organizations need a flexible architecture that can adapt to each business challenge. AWS CAF-AI offers an approach for organizations on their AI journey.

As enterprises look for AI-powered solutions, offerings that address specific business pain points will have an advantage over generic offerings. To this end, Amazon’s strategy of building AI-powered tools with an industry- or process-specific target will resonate with customers. AWS HealthScribe is one such tool. HealthScribe is still in its infancy, and many questions around scalability, adaptability, and compliance remain, but these will likely be ironed out as the service matures with time and increased adoption.

With all leading software vendors moving quickly to garner mind share and market share, AWS AI offerings make a compelling case for clients of all sizes to take a serious look. Systems integrators play a significant role in driving the adoption of nascent technologies, and the range of AWS services gives them multiple options to help customers on their AI journey. The AWS AI strategy covers many innovation possibilities, opening opportunities for customers and partners.

Ram Viswanathan, Consultant and ex-IBM Fellow, contributed to this blog post.